It's 2022 and Data Labeling Still Sucks

March 21,2023Intro

You've heard it before. Labeling data for machine learning sucks. Labeling is laborious, time consuming and difficult to scale.

Despite all the innovations in AI, data labeling has largely remained the same over the last decade. However, as the race to build AI heats up, companies have begun investing heavily in getting their labeling strategy right as good datasets are the key to building great AI systems.

The race to build datasets has created a billion-dollar labeling industry

The explosion of data labeling tools, platforms, and services has created a billion-dollar industry. New technologies include AI-assisted labeling, dataset versioning, and cloud infrastructure that enables labeling at scale. Sources including Grand View Research estimate data labeling market revenue to grow to US$38.1 billion by 2028.

In our previous article, we examined the pros and cons of various data collection strategies. While data collection remains the most critical stage in the pipeline, data labeling comes in at a close second in its impact on AI performance and robustness. In this article, we’ll examine how data labeling affects AI performance, and how you can decide on a labeling strategy that’s best for your needs.

Labeling for computer vision

The dominant method to build robust computer vision systems in production is supervised learning, where AI models are trained on image-label pairs.

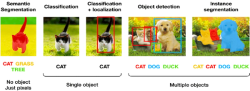

Different computer vision tasks require different types of labels. Classic vision tasks often make use of class labels, bounding boxes, keypoints, and segmentation masks. These labels are easily collected by human effort, and may be automated to a limited extent.

As AI systems mature to tackle ever harder vision problems over the years, they have become hungrier for richer and more complex labels, requiring depth maps, 3D point clouds, voxels, or meshes, and temporal labels such as optical flow maps. These types of labels are often used in advanced tracking systems, robotics, and autonomous vehicles. They are typically harder to acquire, since they must be collected using specialized sensors alongside raw data.

Finally, the more advanced and specialized the task, the more often you'll require custom labels, such as text captions for image captioning, or scene graphs for scene parsing or visual question answering.

How does labeling affect AI performance?

The better quality labels we provide, the better the AI performance. Much like an error in the test answers for an examination, wrong or inaccurate labels can confound the training process.

Acquiring these labels at scale has proved challenging but surmountable with enough capital and effort. However, ensuring the acquired labels are both correct and consistent is no easy feat.

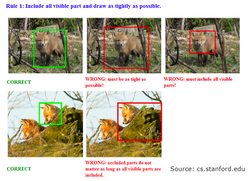

One of the biggest problems in data labeling is label inconsistency. This refers to data that has inconsistent or conflicting annotations - resulting from human error, unclear assumptions and instructions, ambiguity in the images, or the subjective nature of the task. For every new label, class, and scenario, labelers must be trained to follow strict guidelines to reduce errors, biases, and subjective labels.

If a dataset has inconsistent labels, models will have trouble learning the correct relationships between images and labels. While a small amount of noise and error can be desirable to help with generalization and reducing overfitting, too much can be detrimental.

Additionally, numerous revisions and quality checks are required to ensure that labels are clean and ready for training. Unfortunately, ensuring pristine labels usually means lengthier turnaround times.

To better understand the impact of the labeling process on AI performance, we’ll look at specific types of labeling issues in the next section.

Types of labeling problems

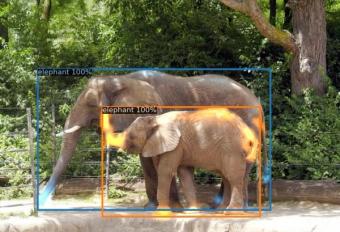

In computer vision, labeling errors can include misclassifications, where labelers assign incorrect classes to objects; and inaccurate localizations, where labelers draw coarse or misaligned bounding boxes and segmentation masks out of fatigue or convenience. Since individuals introduce their own habits and biases when labeling, datasets can have inconsistent classes and localization accuracy.

Training models on these datasets can result in poor localization performance, and false or missed detections. In the first example, shipping containers are grouped together coarsely, causing two serious issues. Firstly, a detection model would be penalized for correctly detecting individual containers, since the labels are grouped. Secondly, it may learn to correlate areas of the background included in the grouping with the containers themselves. Inference on similar backgrounds may then trigger false positive detections.

Other examples of label errors are shown below, including blobby segmentation masks and incorrect bounding boxes.

Missed labels are common in large datasets with small objects, where humans are unable to identify and label all the relevant objects. The inverse problem, where humans label objects that shouldn’t be there, is less common, but not entirely unheard of.

Labeling subjectivity is a common issue when labeling requires human expertise. For instance, in cancer diagnosis, the exact same medical image may be labeled as benign or malignant by different doctors. In geospatial analysis, identifying and labeling aircraft from satellite images can also be inconsistent, since experts need to identify aircraft despite modifications, attachments, paint jobs, decals, and camouflage, to the best of their ability. Subjectivity can also arise when instructions are incomplete or unclear, such as when deciding if and how truncated or faraway objects should be labeled.

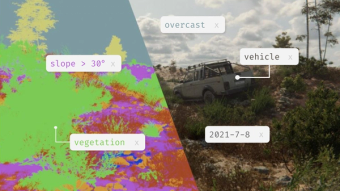

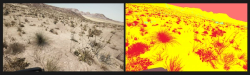

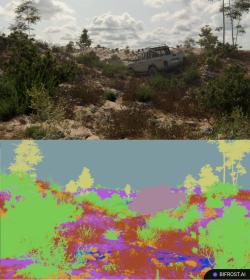

The inherent difficulty of the labeling task can also amplify labeling errors and subjectivity. In the Bifrost-generated desert scene below, grasses and shrubs must be annotated with their pixel-level segmentation masks (on the right). Imagine how difficult and time-consuming it would be for a human to do this!

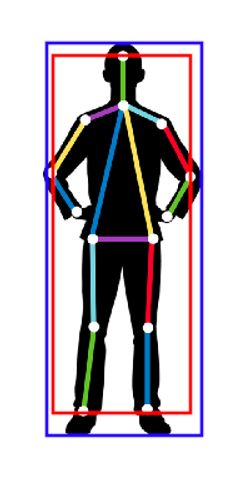

Derived labels can also cause problems. To illustrate this problem, consider two methods to create rectangular bounding boxes. One option is creating a bounding rectangle around keypoints of the object (red rectangle). Another option is to draw the bounding rectangle around the segmentation mask of the object (blue rectangle). These two methods can lead to vastly different results. Mixing or mis-specifying these calculations can result in label inconsistency.

Finally, the choice of labels when defining the business or research problem can have a profound impact on the resulting label quality. Choosing the wrong types of labels (for instance, horizontal bounding boxes rather than oriented bounding boxes) can lead to poor performance and poor fit for your use case. In the design and specification phase, defining the problem and technical scope clearly is highly critical.

Labeling methods

In-House Labeling

There are numerous basic labeling tools perfect for classic computer vision problems, such as image classification, object detection, and segmentation. They’re usually open-source and offer a bare-bones approach to manual labeling.

These tools are perfect when you need to “get the job done”. They’re often used by AI teams themselves to label small sets of images, and when data is sensitive and cannot be exposed to crowdsourced workers.

They usually run as standalone browser or desktop applications, and offer a graphical interface for users to create class labels and draw shapes such as bounding boxes, polygons, and keypoints. They may also offer basic sorting, filtering and tagging features to streamline the labeling process.

Pros

- Fastest way to label small datasets

- Data stays in-house, great for privacy-sensitive data types and products

- With domain expertise from the AI team, an in-house approach can offer better label quality, reducing labeling errors

- Recent open-source tools have been improving in efficiency and user-friendliness, and even offer features such as AI auto-labeling

Cons

- Less hires, slower labels. Labeling speed is directly related to the number of labelers working on the task. For in-house labeling, the primary bottleneck is allocating manpower for labeling tasks.

- Some use-cases may require additional domain expertise, e.g. for the medical and geospatial examples discussed above.

Examples of basic in-house labeling tools

Crowdsourced Human Labeling

Robust, well-trained models can require millions of labels per dataset. At this scale, in-house solutions start to become inefficient. Crowdsourcing has been a popular approach to labeling at scale, where remote workers are paid to annotate images.

Crowdsourcing is the go-to option when the amount of data exceeds quantities in-house teams can handle. However, since there is no control over labeler quality, clear instructions and detailed guides needed for the labelers’ reference - examples and standardized approaches to handle edge cases, ambiguous objects, occlusion, and overlaps.

A crowdsourcing approach can be combined with free or paid labeling tools for greater efficiency and label quality. However, recent studies have shown that crowdsourcing can result in poor quality labels that ultimately affect AI performance.

Pros

- Fast way to label large datasets

- No internal effort required. Crowdsourcing platforms allow thousands of contractors to label data.

Cons

- Expect lower quality labels, chiefly because some labeling tasks require deep domain expertise, and non-expert labelers tend to interpret data differently.

- Extra effort needed to ensure quality control (QC). Preemptive measures could include creating detailed guides and ensuring workers adhere to them, while remedial measures could include multiple stages of QC after labeling, ensuring adherence and consistency.

Examples of crowdsourcing platforms

Enterprise labeling tools

Since 2018, there has been an explosion of enterprise labeling tools, contributing to the labeling industry’s billion-dollar status today.

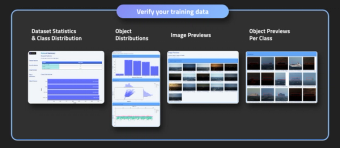

Today’s enterprise labeling platforms mostly adopt a hybrid labeling strategy. Images are initially “pre-labeled” by AI models. Errors in these labels can be found through filtering results by confidence scores and other designed or learned rules. Human workers then review and correct the annotations, which can then be used to improve the pre-labeler models further.

For a detailed overview of features and pricing across most labeling platforms, check out V7’s comparison article!

Pros

- Fast way to label multiple large datasets

- Data management is built into some platforms

- Dedicated support for the labeling pipeline

Cons

- Does not solve human errors, missed labels, and subjectivity in labeling

- A portion of your dataset may end up with AI-assisted labels, which can contain biases

- Plans often scale with dataset size for enterprise customers

- Other than submitting specific datasets to each vendor to compare labels, there is no way to accurately gauge label quality across platforms

Examples of enterprise tools

Synthetic data

Synthetic data offers an alternative to having to label data at all. While human-created labels can be inconsistent and inaccurate, synthetic data offers computer generated and verified labels. This enables creating new products and applications that leverage label types such as ultra-detailed segmentation masks, 3D cuboid annotations, depth maps, and optical flow maps, which are otherwise impossible to obtain via human annotations.

Pros

- Computer generated pixel-perfect labels

- Synthetic data solutions are useful for labels near impossible to acquire via human effort

Cons

- Machine labels, though pixel-perfect, can differ from human labels. When testing for performance on human-labeled test sets, we may need to reintroduce imperfections

- It can’t label the existing dataset you have on hand. However, like human labels, synthetic data can be used to train AI models to pseudo-label data, although QC is still required.

Conclusion

Many companies are starting to recognize that labeling is a laborious workflow that can be improved via better tools, platforms, and AI assistance. Labeling strategies range from using open-source, crowdsourcing, and enterprise tools, to completely reformulating the problem and utilizing synthetic data.

If you already have data on hand, you should get it labeled using some of these great tools.

At Bifrost, we’ve had great success using only synthetic data for training and all our real data for testing, since we believe that rare real-world examples are extremely valuable for battle-testing algorithms instead of training on them.

Other independent researchers have reported similar successes, both training with pixel-perfect synthetic data labels alone, and combining them with noisy human-labeled data.

This enables an alternative workflow, where human-labeled data is used as a comprehensive test dataset. Training can be done on either synthetic data alone, or combining synthetic data with small amounts of your labeled data. That way, we increase our test coverage on difficult and rare scenarios in real data.

For a more detailed look at synthetic data and its uses, check out our blog posts!