Similarity and Diversity: The Core Foundations of Robust Computer Vision Models

August 25,2023

In the vibrant field of artificial intelligence (AI), computer vision stands out as one of the most fascinating and rapidly evolving sub-disciplines. Its goal, put simply, is to enable machines to 'see' and understand the visual world around them. Achieving this requires models that are both accurate and robust, capable of handling an array of scenarios. This is where the twin concepts of similarity and diversity come into play, serving as the critical building blocks for these robust models.

Similarity: Bridging the Domain Gap

The first of these pillars, similarity, plays a critical role in reducing what experts refer to as the 'domain gap'. In essence, this refers to the difference between the environment in which a model is trained, and the one in which it is subsequently deployed.

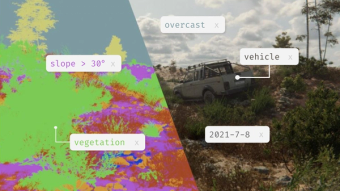

For example, consider a computer vision model developed to help drones navigate. The training imagery should be generated on the same hardware as will be used in the drone - meaning that resolution, field of view, camera height & angle, jitter, blur, pitch, roll, and speed should all be matched as closely as possible to the real-world use case.

Furthermore, to accurately bridge the domain gap, other unique factors pertaining to the use case need to be considered. This could include the distances at which objects may appear, their orientations, the typical speed at which the drone will be moving, and so on. By controlling for these elements, we ensure our models are trained on data that closely mirrors the conditions they'll encounter in the real world.

Diversity: Creating Versatile Models

Once we have achieved similarity, it's time to add a twist: diversity. In computer vision, this is all about adding a wide range of variation to the aspects we did not control for in the similarity settings.

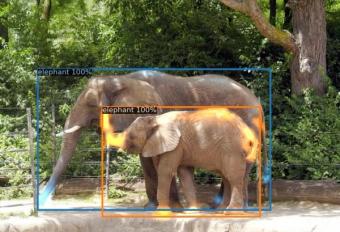

In our drone example, this could mean diversifying aspects like the textures of the landscape, sizes of objects the drone may encounter, different weather conditions, varying lighting situations, and so on. It might seem counterintuitive, but this diversity in training data helps to create models that are more adaptable and capable of dealing with a host of scenarios they may encounter.

The objective here is to ensure the model is not only proficient in dealing with conditions similar to those it was trained on but can also handle situations that deviate from those conditions. By diversifying the training data, we equip the model with the capacity to deal with a wider array of situations, boosting its robustness and generalizability.

Harmonizing Similarity and Diversity

So, how do these two seemingly contradictory factors work together? It's all about balance. Achieving similarity allows our model to understand the specifics of the context in which it will operate, enhancing precision. Introducing diversity, on the other hand, equips the model to manage scenarios it hasn't encountered during training, thereby boosting its adaptability.

The key lies in deliberately controlling for certain conditions (the similarity) and intentionally diversifying others (the diversity). This allows us to train models that are specifically tailored to our use case, yet are flexible enough to handle unexpected scenarios or changes in the environment.

In conclusion, building robust computer vision models is a delicate dance between similarity and diversity. By leveraging both these elements, we can create models that are not only accurate but also versatile and adaptable - a combination that is indispensable in the rapidly evolving realm of computer vision. This delicate balancing act not only improves model performance, but it also propels us forward in our quest to create AI that truly understands and interacts with the visual world.